Developer toolboxes are a lifeline for architects working within the software development life cycle (SDLC). A toolbox can comprise a number of solutions, including programming languages, HTTP servers, load balancers, and databases (both relational and NoSQL). Within modern AWS cloud services and microservices, developers can use messaging to ensure reliable, speedy communication between services.

Within AWS Microservices, lightweight mechanisms broadcast messages to endpoints, where components respond to them. Only services that are subscribed to the topic will be able to receive and reply to messages. Messaging is a great way to integrate or loosely couple systems so that they can communicate with one another.

What is Messaging?

Within the AWS cloud, messaging is an asynchronous way to communicate from service to service within Microservice architectures. While it is not a steady stream of communication, messaging tends to be near real time, in that components typically receive a message shortly after it is sent. There are a number of purposes for messaging in Microservices, including:

- Develop event-driven architectures

- Decouple applications for the sake of improved performance, reliability and scalability

Queue and the Topic Serve as the Main Resources in AWS Microservice Messaging

As opposed to a database service, where the resource is a table (think SQL), the queue and the topic are the main messaging resources. Queues serve as a place where services retrieve messages, on an immediate basis. Applications that place messages in a queue are known as message producers. A message consumer retrieves the message.

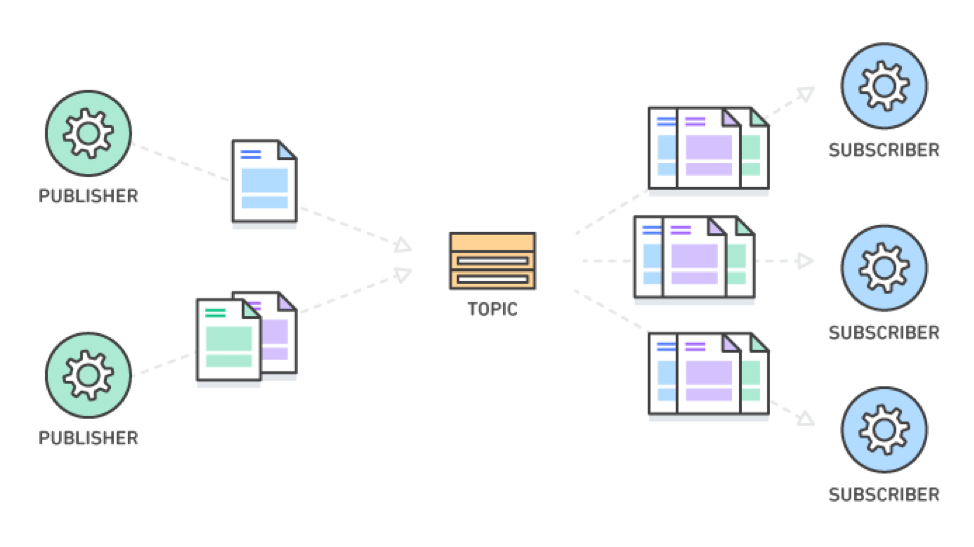

A topic is like a message board. Messages created by message producers end up on the topic thread. Once a message is on the topic, subscribed recipients are notified of it. A topic publisher broadcasts messages to the topic, while subscriptions determine which recipients receive them.

Courtesy of AWS
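To make the difference between the two resources concrete, here is a minimal in-memory sketch. It does not call any AWS service; the `MessageQueue` and `Topic` classes are hypothetical stand-ins that only illustrate the delivery semantics: a queued message is retrieved by one consumer, while a published message reaches every subscriber.

```python
from collections import deque


class MessageQueue:
    """In-memory stand-in for a queue: each message goes to one consumer."""

    def __init__(self):
        self._messages = deque()

    def send(self, body):
        # Called by a message producer.
        self._messages.append(body)

    def receive(self):
        # Called by a message consumer; returns None when the queue is empty.
        return self._messages.popleft() if self._messages else None


class Topic:
    """In-memory stand-in for a topic: every subscriber gets every message."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, body):
        for callback in self._subscribers:
            callback(body)


orders = MessageQueue()
orders.send("order-1")
orders.send("order-2")
first = orders.receive()   # one consumer takes "order-1"
second = orders.receive()  # another consumer takes "order-2"

inbox_a, inbox_b = [], []
events = Topic()
events.subscribe(inbox_a.append)
events.subscribe(inbox_b.append)
events.publish("price-changed")  # both subscribers are notified
```

In a real deployment the queue and topic would be managed services rather than objects in one process, but the producer/consumer and publisher/subscriber roles stay the same.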

When to Use Messaging in AWS Microservices

Service-to-service communication – When you have two services that must communicate with one another, for example, when an eCommerce website needs to communicate with its customer relationship management (CRM) system, the communication is from frontend to backend. Message queues can relay communications from the frontend website to the backend CRM, where they are consumed. You can also use a workload distribution tool such as a load balancer in between the CRM and the website to call API actions.

Asynchronous work item backlogs – messages that contain actions that take longer to process can be put into a queue for consumers to execute asynchronously. An example is an airline booking cancellation system. In some instances, cancellations take more time than immediate transactions, e.g. up to a minute. Pending cancellations may add up, at which point a worker would need to poll the queue (check it for pending items) and execute the cancellations.

State change notifications – architects can use messages when a system needs to be updated with state changes to execute certain items. For example, an eCommerce website may have inventory that it needs to keep track of. Programmers can publish notifications so that any applications subscribed to the topic will respond by triggering certain actions. For example, when an item is close to being sold out, the eCommerce backend will order more. When it is out of stock the frontend will stop offering the product. Architects can program the system to keep track of queries that signal some kind of state change.

When Messaging Should Not Be Used in AWS Microservices

When selecting only certain messages – when you only need select messages from a queue, for example, those with certain attributes or those that match certain queries, don’t use AWS Microservices messaging on its own. Messages that no consumer is polling for may get stuck in the queue. Message routing and filtering can help, however, since messages are evaluated against subscriber criteria at the queue or topic.

Very large messages or files – messages that are larger than tens to hundreds of kilobytes may not be ideal for messaging protocols. AWS recommends using a dedicated file (or blob) storage system that supports uploading larger chunks of data. The message then carries a reference to the stored object rather than the object itself. If anything gets held up, dedicated file (or blob) storage such as Amazon S3 can retry or resume downloads.
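The idea of passing a reference instead of the payload can be sketched as follows. This is an illustration under stated assumptions, not an AWS API: `blob_store` is a plain dictionary standing in for object storage, `MAX_INLINE_BYTES` is an arbitrary threshold chosen for the example, and small payloads are assumed to be UTF-8 text.

```python
import json
import uuid

MAX_INLINE_BYTES = 64 * 1024  # assumed threshold for this sketch

blob_store = {}  # hypothetical stand-in for an object storage bucket


def build_message(payload: bytes) -> str:
    """Send small payloads inline; store large ones and send a reference."""
    if len(payload) <= MAX_INLINE_BYTES:
        return json.dumps({"kind": "inline", "data": payload.decode("utf-8")})
    key = f"payloads/{uuid.uuid4()}"
    blob_store[key] = payload  # upload happens outside the messaging system
    return json.dumps({"kind": "reference", "key": key, "size": len(payload)})


def read_message(message: str) -> bytes:
    """Resolve a message back to its payload on the consumer side."""
    envelope = json.loads(message)
    if envelope["kind"] == "inline":
        return envelope["data"].encode("utf-8")
    return blob_store[envelope["key"]]  # download by reference


small = b"hello"
large = b"x" * (MAX_INLINE_BYTES + 1)
small_message = build_message(small)
large_message = build_message(large)
```

The message that travels through the queue stays small regardless of payload size, and a failed download can be retried without resending the message.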

Characteristics of Messages in AWS Microservices

Although it frequently relies on UDP packets and direct TCP connections underneath, enterprise messaging operates at a higher level. Messages lend themselves to collaboration for subscribers who work across business functions. The publishing service does not need to know who the recipients are, and the receiving service does not need to know who sent the message. Architects and SDLC teams will typically send payloads within messages. Payloads may be in XML, JSON or binary data format.

AWS allows users to send messages with a set of attributes, including structured metadata items. Examples of attributes include geospatial data, filters, identifiers, signatures and timestamps. The attribute, while not part of the message body, will enable the recipient to enact a certain process. Users may append attributes that aid in the structure of push notifications.
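As a rough sketch of how a payload and its attributes travel together, the example below builds a message with a JSON body and separate metadata, then checks the attributes against a subscriber's filter. The `make_message` and `matches` helpers, the attribute names, and the filter shape are all hypothetical and chosen for illustration; they are not an AWS API.

```python
import json
from datetime import datetime, timezone


def make_message(payload: dict, **attributes) -> dict:
    """Wrap a JSON payload with metadata that is kept outside the body."""
    attrs = {"timestamp": datetime.now(timezone.utc).isoformat()}
    attrs.update(attributes)
    return {"body": json.dumps(payload), "attributes": attrs}


def matches(message: dict, filter_policy: dict) -> bool:
    """True when every filtered attribute has one of the allowed values."""
    attrs = message["attributes"]
    return all(
        attrs.get(name) in allowed for name, allowed in filter_policy.items()
    )


message = make_message(
    {"sku": "A-1", "quantity": 0},
    event_type="out_of_stock",
    region="eu",
)

# A subscriber that only cares about stock events can decide without
# parsing the body at all.
wants_stock_events = {"event_type": ["low_stock", "out_of_stock"]}
wants_us_only = {"region": ["us"]}
```

Because the decision uses only the attributes, the recipient can route or discard a message before it spends time deserializing the payload.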

Key Features of AWS Microservice Messaging

Messaging with added bells and whistles (i.e., enhancements) can add efficiency to a Microservices architecture. Beyond produce-consume and publish-subscribe, a proper Messaging implementation can exhibit the following features:

Push or pull delivery – programmers can implement messaging that continuously “pulls” – or queries – for new messages from a service. A push feature will notify subscribers whenever there is a new message. Within push or pull delivery, there are three ways to deliver messages:

Packet Protocol – a delivery method where each packet contains units of the message. It can be used for either push or pull delivery of messages.

HTTP Call – messages can be sent via HTTP calls made to your API endpoint.

Long Polling – you can also use long polling, which combines both push and pull functionality. An HTTP mechanism holds a connection open long enough to transfer messages.
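The waiting behavior behind long polling can be sketched with Python's standard library. This is a single-process analogy rather than an HTTP client: the `long_poll` function and its `wait_seconds` and `max_messages` parameters are hypothetical names, and a `queue.Queue` stands in for the remote service. The consumer blocks for up to the wait window instead of returning immediately when nothing is available.

```python
import queue
import threading


def long_poll(q: queue.Queue, wait_seconds: float, max_messages: int = 10) -> list:
    """Wait up to wait_seconds for the first message, then drain what is ready."""
    try:
        batch = [q.get(timeout=wait_seconds)]
    except queue.Empty:
        return []  # nothing arrived during the wait window
    while len(batch) < max_messages:
        try:
            batch.append(q.get_nowait())
        except queue.Empty:
            break
    return batch


inbox = queue.Queue()
# A producer delivers a message 50 ms after the consumer starts waiting.
threading.Timer(0.05, inbox.put, args=("late-arrival",)).start()
received = long_poll(inbox, wait_seconds=2.0)
```

Compared with polling in a tight loop, the consumer makes one request and still gets the message as soon as it arrives, which is why long polling is often described as a mix of pull and push.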

Dead-letter queues – whenever a message can’t be processed, the system will move it to a dead-letter queue. Architects can set a predetermined number of attempts before a message goes to the dead-letter queue. Dead-letter queues help to keep the main queue flowing and prevent messages that will never be delivered from consuming CPU.
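A minimal sketch of the retry-then-park behavior, assuming an in-memory queue and a retry limit of three (`MAX_RECEIVE_COUNT` is an illustrative value, and `drain` and `handle` are hypothetical names):

```python
from collections import deque

MAX_RECEIVE_COUNT = 3  # assumed retry limit for this sketch


def drain(messages, handler, max_receive_count=MAX_RECEIVE_COUNT):
    """Process messages, retrying failures; return (processed, dead_letters)."""
    main_queue = deque((body, 0) for body in messages)
    processed, dead_letters = [], []
    while main_queue:
        body, receive_count = main_queue.popleft()
        receive_count += 1
        try:
            handler(body)
            processed.append(body)
        except Exception:
            if receive_count >= max_receive_count:
                dead_letters.append(body)  # stop retrying; park for inspection
            else:
                main_queue.append((body, receive_count))  # retry later
    return processed, dead_letters


attempts = []


def handle(body):
    attempts.append(body)
    if body == "corrupt":
        raise ValueError("cannot parse message")


processed, dead_letters = drain(["ok-1", "corrupt", "ok-2"], handle)
```

The failing message is attempted three times and then set aside, so the healthy messages behind it are not blocked and the bad one remains available for debugging.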

Delay queues and scheduled messages – users may want a service to hold off on processing a message. Users may also postpone and schedule messages for a later date and time. Certain behaviors are also programmable, such as putting a delay on all message deliveries.
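The sketch below illustrates both a queue-wide default delay and a per-message override. It is an in-memory model with hypothetical names (`DelayQueue`, `default_delay`), and the current time is passed in explicitly as `now` so the behavior is easy to follow; a real service would use its own clock.

```python
import heapq
import itertools


class DelayQueue:
    """Messages become visible only after their delay has elapsed."""

    def __init__(self, default_delay=0.0):
        self.default_delay = default_delay
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps insertion order

    def send(self, body, now, delay=None):
        # A per-message delay overrides the queue-wide default.
        delay = self.default_delay if delay is None else delay
        heapq.heappush(self._heap, (now + delay, next(self._order), body))

    def receive(self, now):
        """Return the next visible message, or None if none is due yet."""
        if self._heap and self._heap[0][0] <= now:
            return heapq.heappop(self._heap)[2]
        return None


reminders = DelayQueue(default_delay=30)
reminders.send("send-reminder", now=0)        # visible at t=30
reminders.send("audit-entry", now=0, delay=0)  # visible immediately
```

Polling at `now=0` returns only the undelayed message; the reminder stays hidden until `now` reaches 30.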

Set message delivery options – a variety of options are available that affect the delivery of messages, such as prioritizing messages so they jump the queue for delivery in front of other messages. Recipient acknowledgement of delivery may also be triggered. You can also determine whether messages will be ordered or unordered.
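Prioritization and ordering can be combined, as in this small sketch. The `PriorityMessageQueue` class and its numeric priority scale (lower number means more urgent, default 5) are assumptions made for the example rather than a feature of any particular AWS service.

```python
import heapq
import itertools


class PriorityMessageQueue:
    """Delivers urgent messages first and keeps FIFO order within a priority."""

    def __init__(self):
        self._heap = []
        self._sequence = itertools.count()

    def send(self, body, priority=5):
        # Lower number = higher priority; the sequence number preserves
        # arrival order among messages that share a priority.
        heapq.heappush(self._heap, (priority, next(self._sequence), body))

    def receive(self):
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


outbound = PriorityMessageQueue()
outbound.send("newsletter")
outbound.send("password-reset", priority=1)  # jumps ahead of earlier messages
outbound.send("receipt")
delivered = [outbound.receive() for _ in range(3)]
```

Here the password reset is delivered first, followed by the two default-priority messages in the order they were sent.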

Some Things to Consider When Designing Your AWS Microservices Messaging

There are a few points to consider when designing your AWS Microservices messaging.

- Do your messages need to be delivered in the sequential order that they are created?

- Do you need certain messages to be expedited as priority?

- Do you have a process in place for when messages go undelivered?

- Is parallelization an option?

Threat Model Your AWS Microservices Architecture for Secure Message Delivery

Amazon SQS and Amazon SNS are the services you will need in order to get started with messaging on AWS Microservices. ThreatModeler is a top-rated, automated threat modeling tool that can help you to secure your cloud AWS Microservices architecture. ThreatModeler is ideal for cloud native applications for its ability to scale across thousands of threat models, and for its ability to provide end-to-end security.

To learn more about how to leverage ThreatModeler’s Visual, Agile, Secure Threat Modeling (V.A.S.T.) methodology for your DevSecOps environment, book a demo to speak to a ThreatModeler expert today.